Updated · Feb 11, 2024

Updated · Jan 29, 2024

Aditya is an Azure DevOps and Infrastructure Virtualization Architect with experience in automation,...

Florence is a dedicated wordsmith on a mission to make technology-related topics easy-to-understand....

Data obtained from various sources is usually raw, unstructured, and unactionable. Data parsing converts that raw data into a structured form that businesses can use for insights and decisions.

Manual data entry and collection are extremely time-consuming. Fortunately, many tools on the market today automate data parsing to help businesses with their information needs.

In this article, you will discover the best data parsing tools. Continue reading to explore the key features, pricing, and benefits of each tool.

Let’s dive in.

Data parsing means converting unstructured and unreadable data to structured and readable formats. This is the second step of the ETL (Extract, Transform, and Load) data integration process.

Before it can be converted, the data must first be collected. Data extraction involves gathering unstructured, semi-structured, and structured data.
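To make the transform (parsing) step concrete, here is a minimal Python sketch of a tiny ETL run. It assumes the raw input is a few hypothetical order lines in free text: the sketch extracts the lines, parses each one into a structured record, and loads the records into an in-memory SQLite table. The line format and table schema are invented for illustration.

```python
import re
import sqlite3

# Hypothetical raw input: semi-structured text, one order per line.
RAW_DATA = """\
2024-02-11 order 1042 total 19.99 USD
2024-02-11 order 1043 total 5.00 USD
this line is noise and will be skipped
"""

# Assumed layout of a valid line (illustrative only).
LINE_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) order (?P<order_id>\d+) "
    r"total (?P<total>\d+\.\d{2}) (?P<currency>[A-Z]{3})$"
)


def extract(raw: str) -> list[str]:
    """Extract: collect the non-empty raw lines from the source."""
    return [line.strip() for line in raw.splitlines() if line.strip()]


def transform(lines: list[str]) -> list[dict]:
    """Transform (parse): turn each matching line into a structured record."""
    records = []
    for line in lines:
        match = LINE_PATTERN.match(line)
        if match is None:
            continue  # unparseable lines are simply dropped in this sketch
        records.append({
            "date": match["date"],
            "order_id": int(match["order_id"]),
            "total": float(match["total"]),
            "currency": match["currency"],
        })
    return records


def load(records: list[dict], conn: sqlite3.Connection) -> None:
    """Load: write the structured records into a database table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(date TEXT, order_id INTEGER, total REAL, currency TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (:date, :order_id, :total, :currency)",
        records,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_DATA)), conn)
print(conn.execute("SELECT order_id, total FROM orders ORDER BY order_id").fetchall())
# → [(1042, 19.99), (1043, 5.0)]
```

Real pipelines add validation and error reporting, but the three-stage shape stays the same.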

Some of the best data extraction software can complete the entire ETL process since they can be integrated into CRMs, ERPs, or data warehouses. Data extraction tools can also take any of these forms:

With the versatility of most data extraction tools, they can also be used for parsing. Below are ten of the best tools that can help you with your parsing (and extraction) tasks.

Pricing:

Key Features:

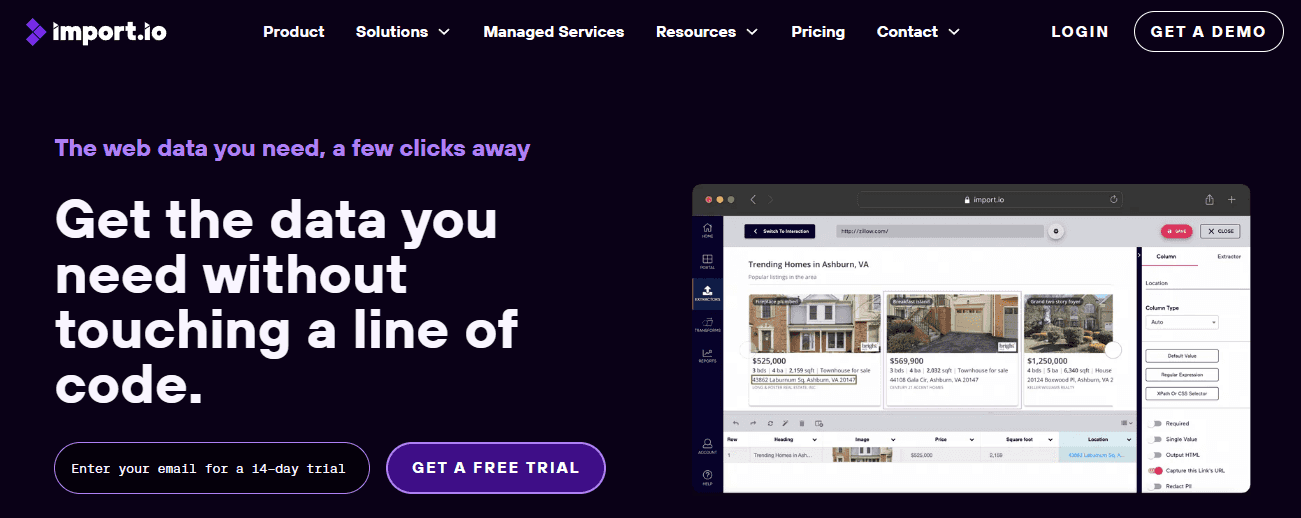

Import.io is a powerful web scraping service with an easy-to-use UI. It uses a point-and-click interface and machine learning to suggest the next action automatically.

Its "data behind login" feature lets you extract data even from pages that require signing in.

Its machine-learning feature optimizes extractors each time a user saves them, so they run in the shortest possible time. Import.io also saves a record of the action sequence for each website, which makes repeat workflows easier.

You need not be afraid to experiment, as Import.io provides support for its users. You can try the 14-day trial; no credit card is required.

Pricing:

Key Features:

ParseHub is another point-and-click web scraping tool. It requires no programming expertise and has a set of easy-to-understand tutorial videos.

It is a cloud-based service, but you must install its desktop software on your device. The software currently supports Windows, Linux, and macOS.

The good thing about ParseHub is that its free plan lets you get a feel for how the software works. Five projects with 200 pages each is enough to familiarize yourself with the tool.

They also offer a guaranteed refund if you decide to upgrade your subscription but do not like the service.

Pricing:

Key Features:

Nanonets is a data extraction service that uses AI and machine learning to extract relevant data. It relies on text recognition to parse various types of documents.

You can build a fully automated data pipeline with Nanonets' AI-powered tools, and its accuracy keeps improving as it processes more documents.

The site offers a 7-day free trial, or you can book a call for a demo.

Pricing:

Key Features:

MailParser allows you to parse unstructured information from your recurring emails. You can set up the parsing rules beforehand, and the tool will do the rest.

You can connect MailParser to other apps using webhooks, or download the structured data in JSON, XML, CSV, or Excel format.
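The idea of parsing rules defined in advance can be illustrated with a short Python sketch built on the standard library `email` module and regular expressions. The sample email and the `RULES` mapping are hypothetical; they do not reflect MailParser's own rule format or API.

```python
import json
import re
from email import message_from_string

# Hypothetical recurring email, e.g. an order notification.
RAW_EMAIL = """\
From: shop@example.com
To: orders@example.com
Subject: New order #5531

Customer: Jane Doe
Product: USB-C cable
Quantity: 3
"""

# Rules set up beforehand: field -> (regex with one capture group, where to look).
# Illustrative only; not MailParser's rule syntax.
RULES = {
    "order_number": (r"#(\d+)", "subject"),
    "customer": (r"^Customer:\s*(.+)$", "body"),
    "product": (r"^Product:\s*(.+)$", "body"),
    "quantity": (r"^Quantity:\s*(\d+)$", "body"),
}


def parse_email(raw: str) -> dict:
    """Apply every rule to the subject or body and collect the captured values."""
    msg = message_from_string(raw)
    sources = {"subject": msg["Subject"] or "", "body": msg.get_payload()}
    result = {}
    for field, (pattern, source) in RULES.items():
        match = re.search(pattern, sources[source], re.MULTILINE)
        result[field] = match.group(1).strip() if match else None
    return result


print(json.dumps(parse_email(RAW_EMAIL)))
# → {"order_number": "5531", "customer": "Jane Doe", "product": "USB-C cable", "quantity": "3"}
```

Because the rules are written once, every new email with the same layout is parsed without further work, which is the main appeal of this approach for recurring messages.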

To get accustomed to how the system works, you have the option to sign up for its free plan, which is 30 emails a month for 10 inboxes.

Pricing:

Key Features:

Docparser is a document parsing tool that lets you extract structured information from PDFs, MS Word files, and images. It uses zonal OCR to create presets for the specific data you want to extract.
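Zonal OCR can be sketched in a few lines of Python. This sketch assumes an OCR engine has already produced words with bounding boxes; the `OcrWord` and `Zone` types, coordinates, and preset below are hypothetical and are not Docparser's internal format. A preset is modeled as a set of named page regions, and each field's value is the text whose center falls inside its region.

```python
from dataclasses import dataclass


@dataclass
class OcrWord:
    """A recognized word and its bounding box in pixel coordinates (hypothetical)."""
    text: str
    x: int
    y: int
    width: int
    height: int


@dataclass
class Zone:
    """A named rectangular region of the page where one field is expected."""
    name: str
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, word: OcrWord) -> bool:
        # A word belongs to the zone if its center point lies inside the rectangle.
        cx = word.x + word.width / 2
        cy = word.y + word.height / 2
        return self.left <= cx <= self.right and self.top <= cy <= self.bottom


def extract_zones(words: list[OcrWord], zones: list[Zone]) -> dict[str, str]:
    """Join the words inside each zone, in reading order."""
    result = {}
    for zone in zones:
        inside = [w for w in words if zone.contains(w)]
        inside.sort(key=lambda w: (w.y, w.x))  # top-to-bottom, then left-to-right
        result[zone.name] = " ".join(w.text for w in inside)
    return result


# Hypothetical OCR output for one invoice page.
words = [
    OcrWord("Invoice", 40, 30, 90, 20),
    OcrWord("INV-2024-001", 400, 30, 140, 20),
    OcrWord("Total:", 40, 700, 60, 20),
    OcrWord("$1,250.00", 400, 700, 110, 20),
]
# The preset: where each field sits on this document layout.
preset = [
    Zone("invoice_number", 380, 20, 600, 60),
    Zone("total", 380, 690, 600, 730),
]
print(extract_zones(words, preset))
# → {'invoice_number': 'INV-2024-001', 'total': '$1,250.00'}
```

The preset only works for documents that share the same layout, which is why zonal parsing suits recurring forms such as invoices from a single supplier.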

You can connect Docparser directly to major cloud storage services such as Google Drive, Dropbox, or OneDrive. Through integration platforms like Workato, Zapier, and Microsoft Power Automate, it can also connect to thousands of workplace apps.

You can start Docparser’s 21-day free trial with no credit card required.

Pricing:

Key Features:

Octoparse is a point-and-click data parsing tool that can scrape data from online sources. It is a no-code tool without a steep learning curve, and its powerful AI suggestions can help you customize your workflow.

Its automatic IP rotation and automatic request retries let it scrape even highly sophisticated websites. Scheduled scraping is also possible, and you can retrieve your data at any time in JSON, CSV, or Excel format.
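Octoparse handles IP rotation and retries for you, but the general pattern looks roughly like the Python sketch below. The proxy addresses are placeholders, and `fetch` is a hypothetical caller-supplied function standing in for a real HTTP client, so the retry logic can run without a network. This is a generic illustration, not how Octoparse implements the feature.

```python
import itertools
import time
from typing import Callable, Optional

# Placeholder proxy pool (hypothetical addresses); each attempt uses the next one.
PROXIES = ["http://proxy-a:8080", "http://proxy-b:8080", "http://proxy-c:8080"]


def fetch_with_rotation(
    url: str,
    fetch: Callable[[str, str], str],
    proxies: list[str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
) -> str:
    """Retry a request through rotating proxies with exponential backoff.

    `fetch(url, proxy)` is a hypothetical caller-supplied function that returns
    the page body or raises an exception (for example, when blocked).
    """
    proxy_cycle = itertools.cycle(proxies)
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        proxy = next(proxy_cycle)  # rotate to a new outgoing IP on every attempt
        try:
            return fetch(url, proxy)
        except Exception as error:  # sketch: treat every failure as retryable
            last_error = error
            if attempt < max_attempts - 1:
                time.sleep(base_delay * 2 ** attempt)  # with defaults: 0.5 s, then 1 s
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error


# Usage with a fake fetcher: the first proxy is "blocked", the second succeeds.
def fake_fetch(url: str, proxy: str) -> str:
    if proxy == "http://proxy-a:8080":
        raise ConnectionError("blocked")
    return f"fetched {url} via {proxy}"


print(fetch_with_rotation("https://example.com", fake_fetch, PROXIES, base_delay=0))
# → fetched https://example.com via http://proxy-b:8080
```

Rotating the outgoing address makes each retry look like a fresh visitor, while the growing delay avoids hammering a site that is already rate-limiting you.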

The free plan includes a generous 10,000 data rows per export, so you can study how the tool performs before committing to a regular plan.

Pricing:

Key Features:

Hevo Data promises a maintenance-free data pipeline. It is best for moving data from hundreds of sources into your data warehouse, and as a no-code platform it suits anyone who does not want the hassle of maintaining a pipeline.

Data transfer is also encrypted, which protects it from interception, and a dashboard lets you track any delays in data transfer.

A 14-day free trial is available, which is enough to learn about the system.

Pricing:

Key Features:

Web Scraper is a scraping tool that works as a Chrome extension. It is surprisingly powerful, letting you scrape online sources through a point-and-click system.

Because the UI lives in your Chrome browser, it feels intuitive. You can save “selector sitemaps” as presets for real-time or scheduled scraping. The service is cloud-based and uses a Chrome extension on the user’s end.

Parsed data can be exported to CSV, JSON, and XLSX. You can also send exported data directly to Google Sheets, Dropbox, or Amazon S3.
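Exports like these are easy to reproduce outside any particular tool. Here is a minimal Python sketch using the standard `csv` and `json` modules, assuming the scraped records are plain dictionaries (the sample products are hypothetical). XLSX would need a third-party library, so it is left out.

```python
import csv
import io
import json

# Hypothetical records produced by a scraping run.
records = [
    {"title": "Wireless Mouse", "price": "24.99", "url": "https://example.com/p/1"},
    {"title": "USB-C Hub", "price": "39.50", "url": "https://example.com/p/2"},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize records to CSV text, using the first record's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize records to a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


print(to_csv(records))
print(to_json(records))
```

CSV suits spreadsheets, while JSON preserves nesting if your records later gain lists or sub-objects.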

The Chrome extension is free forever, although it lacks proxy support. It is a good way to learn how the tool works.

Pricing:

Key Features:

Scrapy is an open-source web crawling framework for scraping websites. It runs on major operating systems such as Windows, macOS, and Linux.

You build crawlers by customizing selectors, and you can deploy your “spiders” to Zyte Scrapy Cloud. Scrapy is a Python framework, but it does not require extensive coding: anyone with a fair amount of technical knowledge can follow Scrapy’s usage tutorials.

Extracted data can be exported to JSON, XML, and CSV.

Pricing:

Key Features:

Puppeteer is another open-source option for web crawling. This Node.js library works mainly by controlling a headless (no visible interface) Chrome or Chromium browser, but it can also be configured to run “headful.”

You can take screenshots, generate PDFs of pages, automate form submissions and keyboard input, and more.

Unlike Scrapy, Puppeteer is more code-intensive and requires a working knowledge of JavaScript.

The significance of data parsing tools is best shown by their real-life benefits for professionals and modern businesses.

These are the reasons why parsing tools are important:

There are many more benefits to mention, but these are the most obvious. Today, data parsing has become so indispensable that a whole industry has grown up around it.

You have many great options for a data parsing tool, so choose the one that best fits your needs. Competitive pricing is also a plus.

Trying out open-source solutions can also be rewarding in the long run. Paid options buy convenience, so take advantage of free plans and trials to gauge a tool’s performance before committing.

There are many use cases for parsing techniques. The most common is parsing the HTML of webpages to pick out relevant data such as prices and listings. The collected data is then organized in JSON, XML, CSV, or another readable format.
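As a minimal illustration of that common case, the following Python sketch uses the standard library's `html.parser` to pull product names and prices out of an HTML snippet and emit JSON. The markup and class names are hypothetical; real pages vary, and dedicated tools or libraries handle messy HTML more robustly.

```python
import json
from html.parser import HTMLParser


class ListingParser(HTMLParser):
    """Collect product names and prices from a hypothetical listing page.

    Assumes each listing looks like:
    <div class="item"><span class="name">...</span><span class="price">...</span></div>
    """

    def __init__(self):
        super().__init__()
        self.items = []
        self._field = None  # which field the current text belongs to, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "item" in classes:
            self.items.append({})  # a new listing starts
        elif tag == "span" and self.items:
            if "name" in classes:
                self._field = "name"
            elif "price" in classes:
                self._field = "price"

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self.items[-1][self._field] = data.strip()


html = """
<div class="item"><span class="name">Desk Lamp</span><span class="price">$29.99</span></div>
<div class="item"><span class="name">Bookshelf</span><span class="price">$89.00</span></div>
"""
parser = ListingParser()
parser.feed(html)
print(json.dumps(parser.items))
# → [{"name": "Desk Lamp", "price": "$29.99"}, {"name": "Bookshelf", "price": "$89.00"}]
```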

The data parsing process has two primary components: lexical analysis and syntactic analysis. Lexical analysis reads every character of the input data to recognize “tokens” (valid words), and syntactic analysis examines how those tokens relate to one another according to a grammar.
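The two stages can be seen in a toy Python parser for a hypothetical `key = value;` format. The lexer uses regular expressions to group characters into tokens; the parser then checks that the tokens appear in the order the grammar allows and builds a dictionary. Production parsers for HTML, JSON, or PDFs are far more involved, but they follow the same split.

```python
import re
from typing import NamedTuple


class Token(NamedTuple):
    kind: str
    value: str


# Lexical analysis: each token kind is described by a regular expression.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("STRING", r'"[^"]*"'),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("EQUALS", r"="),
    ("SEMI", r";"),
    ("SKIP", r"\s+"),
    ("ERROR", r"."),
]
MASTER_PATTERN = re.compile("|".join(f"(?P<{kind}>{pattern})" for kind, pattern in TOKEN_SPEC))


def tokenize(text: str) -> list[Token]:
    """Scan the input and group characters into tokens, skipping whitespace."""
    tokens = []
    for match in MASTER_PATTERN.finditer(text):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "ERROR":
            raise SyntaxError(f"unexpected character {match.group()!r}")
        tokens.append(Token(kind, match.group()))
    return tokens


def parse(tokens: list[Token]) -> dict:
    """Syntactic analysis: require the pattern `IDENT = value ;` and build a dict."""
    result = {}
    pos = 0

    def expect(*kinds: str) -> Token:
        nonlocal pos
        if pos >= len(tokens) or tokens[pos].kind not in kinds:
            found = tokens[pos].value if pos < len(tokens) else "end of input"
            raise SyntaxError(f"expected {' or '.join(kinds)}, found {found!r}")
        token = tokens[pos]
        pos += 1
        return token

    while pos < len(tokens):
        key = expect("IDENT")
        expect("EQUALS")
        value = expect("NUMBER", "STRING")
        expect("SEMI")
        if value.kind == "NUMBER":
            result[key.value] = float(value.value) if "." in value.value else int(value.value)
        else:
            result[key.value] = value.value[1:-1]  # strip the surrounding quotes
    return result


print(parse(tokenize('name = "Alice"; age = 30; score = 9.5;')))
# → {'name': 'Alice', 'age': 30, 'score': 9.5}
```

Keeping the stages separate means the parser never deals with raw characters, only with a clean token stream, so each stage stays simple and its errors are easier to report.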
